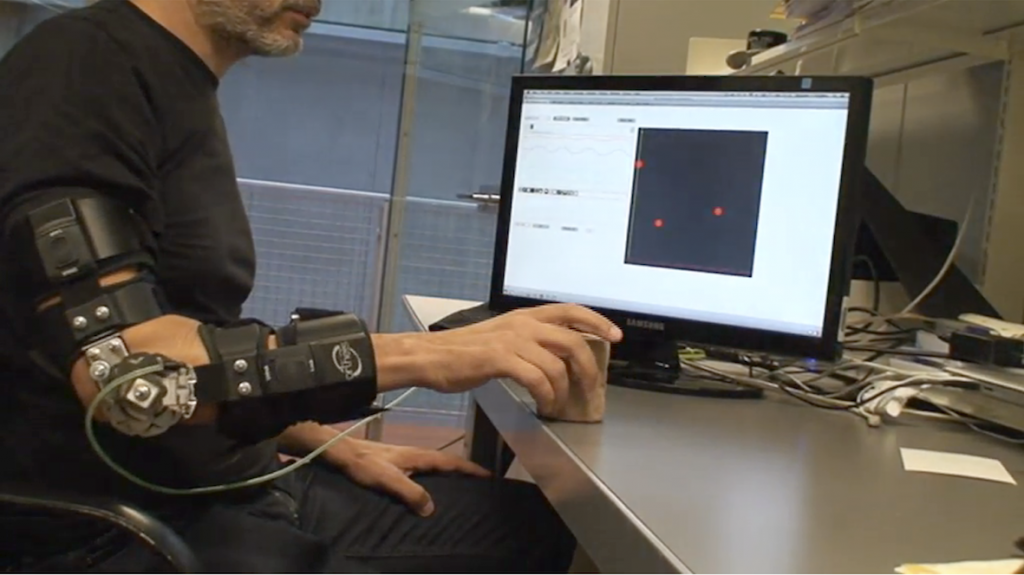

The virtual bath tub is an interactive virtual surface in mid-air that the user can manipulate as if a bath tub filled with water stood in front of them.

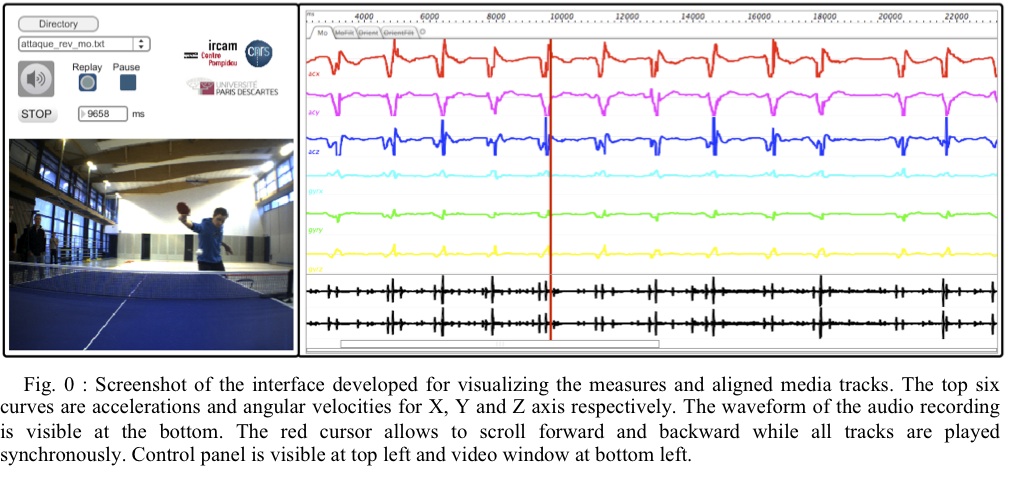

Inspired by the difficulties foley artists face when manipulating water, this scenario lets the user play with splash and underwater sound sequences tied to the energy of the movement.
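As a rough illustration of the idea, the sketch below shows one way a tracked hand position could be turned into a movement energy value and a splash/underwater decision. It is not the project's actual implementation, which is built with MuBu objects. The surface height, the function names `movement_energy` and `classify`, and the mean-squared-speed definition of energy are all assumptions made for this example.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical height (in metres) of the virtual water surface above the sensor.
SURFACE_HEIGHT = 0.20


def movement_energy(positions: List[Vec3], dt: float) -> float:
    """Mean squared speed over a window of hand positions sampled every dt seconds.

    This is one assumed definition of "energy"; other measures would work too.
    """
    if len(positions) < 2:
        return 0.0
    total = 0.0
    for (x0, y0, z0), (x1, y1, z1) in zip(positions, positions[1:]):
        squared_distance = (x1 - x0) ** 2 + (y1 - y0) ** 2 + (z1 - z0) ** 2
        total += squared_distance / dt ** 2
    return total / (len(positions) - 1)


def classify(prev_y: float, y: float, surface: float = SURFACE_HEIGHT) -> str:
    """Label the current frame from the hand height relative to the surface."""
    if prev_y >= surface > y:
        return "splash"      # hand has just crossed the surface going down
    if y < surface:
        return "underwater"  # hand stays below the surface
    return "air"             # hand is above the surface
```

In such a scheme, the label would select which family of sounds to play, and the energy value would pick a softer or more vigorous sequence within that family.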

Conception: Eric O. Boyer.

Motion capture with the Leap Motion device.

Sound synthesis made with MuBu objects (www.ismm.ircam.fr).

Sound materials by Roland Cahen and Diemo Schwarz (Topophonie Project).