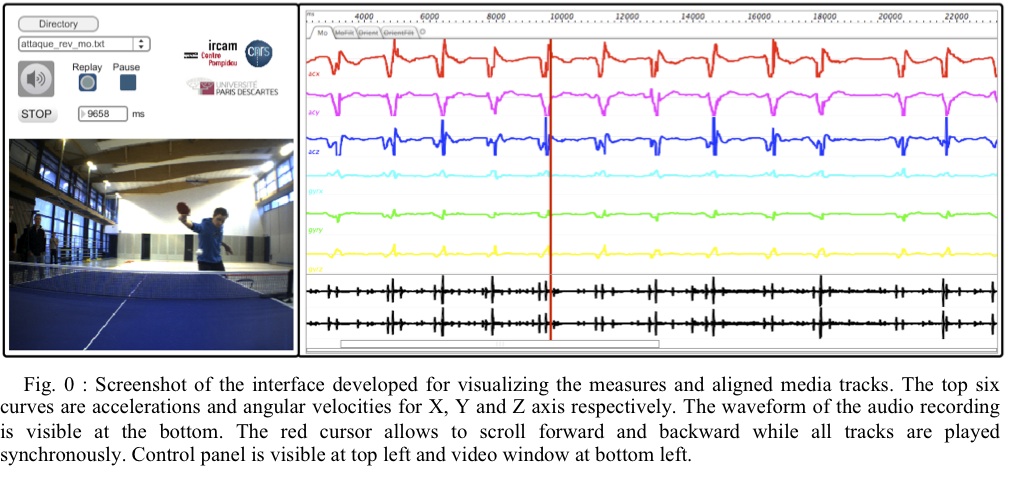

The paper “Low-cost motion sensing of table tennis players for real time feedback” by Eric Boyer, Frédéric Bevilacqua, François Phal and Sylvain Hanneton has been published in the International Journal of Table Tennis Sciences, no. 8. This publication follows the presentation at the Sport Science Congress.


Workshop HC2

Summer School 2013 Human Computer Confluence, http://hcsquared.eu

Workshop From everyday objects to sonic interaction design

July 17-19 2013, Ircam, PDS and IMTR research teams (collaboration with Goldsmiths-London)

Lauren Hayes – PhD student in creative music practice

Emmanouil Giannisakis – Master student in digital media engineering

Jaime Arias Almeida – PhD student in informatics

Alberto Betella – PhD student in communication, information and audiovisual media

David Hofmann – PhD student in theoretical neuroscience

Sport Science Congress

Paper accepted for the conference 13th International Table Tennis Federation Sport Science Congress in Paris, May 12-13 2013

A potential application of sonification for learning specific Table Tennis gestures will be explained and demonstrated.

E. Boyer, F. Bevilacqua, F. Phal, and S. Hanneton, “Low-cost motion sensing of table tennis players for real time feedback”, 13th International Table Tennis Federation Sport Science Congress in Paris, May 12-13 2013.

Progress in Motor Control IX

Two posters have been accepted for the Progress in Motor Control IX meeting, to be held at McGill University in Montreal, July 14-16 2013.

- E. Boyer, Q. Pyanet, S. Hanneton, and F. Bevilacqua, “Sensorimotor adaptation to a gesture-sound mapping perturbation”

- S. Hanneton, E. Boyer, and V. Forma, “Influence of an error-related auditory feedback on the adaptation to a visuo-manual perturbation”

Inauguration day of LABEX SMART

The following poster was presented during the inauguration day of the LABEX SMART, March 26 2013.

It reports on results of the Legos project and on the collaboration between the STMS Lab IRCAM-CNRS-UPMC and ISIR-UPMC.

E. O. Boyer, F. Bevilacqua, S. Hanneton, A. Roby-Brami, J. Françoise, N. Schnell, O. Houix, N. Misdariis, P. Susini and I. Viaud-Delmon, “Sensorimotor Learning in Gesture-Sound Interactive Systems”

Front. Comput. Neurosci.

From ear to hand: the role of the auditory-motor loop in pointing to an auditory source

- 1 CNRS UMR 9912 IRCAM, France

- 2 Laboratoire de Neurophysique et Physiologie, CNRS UMR 8119, Université Paris Descartes UFR Biomédicale des Saints Pères, France

- 3 Neurobiologie des Processus Adaptatifs, CNRS UMR 7102, UPMC, France

- 4 Institut des systèmes intelligents et de robotique (ISIR) CNRS UMR 7222, UPMC, France

Studies of the nature of the neural mechanisms involved in goal-directed movements tend to concentrate on the role of vision. We present here an attempt to address the mechanisms whereby an auditory input is transformed into a motor command. The spatial and temporal organization of hand movements was studied in normal human subjects as they pointed towards unseen auditory targets located in a horizontal plane in front of them. Positions and movements of the hand were measured by a six infrared camera tracking system. In one condition, we assessed the role of auditory information about target position in correcting the trajectory of the hand. To accomplish this, the duration of the target presentation was varied. In another condition, subjects received continuous auditory feedback of their hand movement while pointing to the auditory targets. Online auditory control of the direction of pointing movements was assessed by evaluating how subjects reacted to shifts in heard hand position. Localization errors were exacerbated by short duration of target presentation but not modified by auditory feedback of hand position. Long duration of target presentation gave rise to a higher level of accuracy and was accompanied by early automatic head orienting movements consistently related to target direction. These results highlight the efficiency of auditory feedback processing in online motor control and suggest that the auditory system takes advantage of dynamic changes of the acoustic cues due to changes in head orientation in order to process online motor control. How to design an informative acoustic feedback needs to be carefully studied to demonstrate that auditory feedback of the hand could assist the monitoring of movements directed at objects in auditory space.

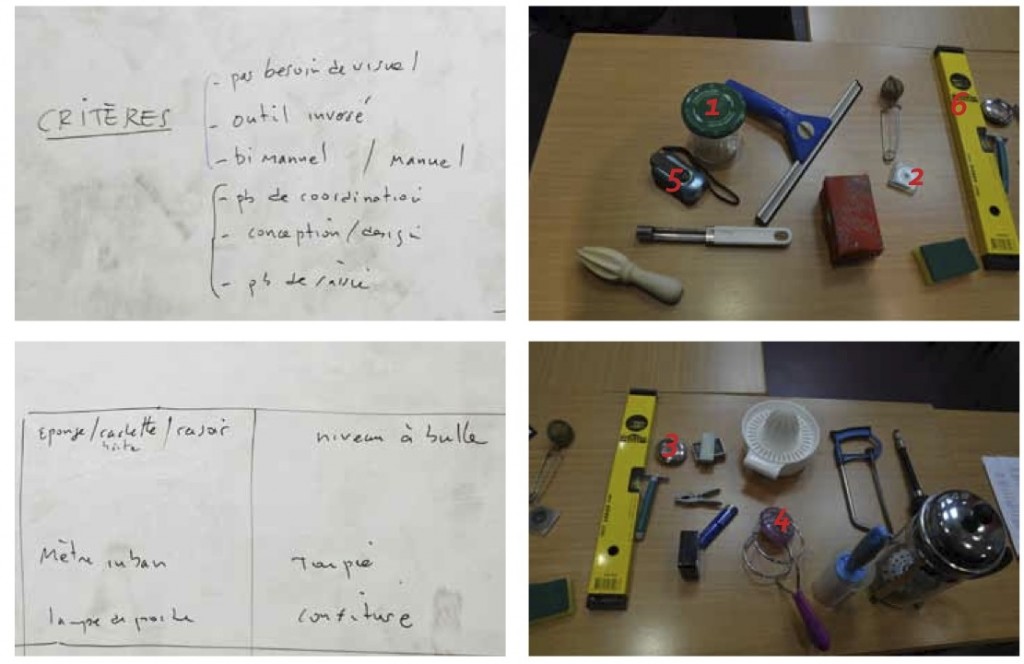

Workshop Participatory Design

We held a two-day workshop at IRCAM, May 30th and June 1st 2012, to brainstorm on possible sonifications of everyday objects. Each participant brought two objects, which were analyzed and discussed. On the second workshop day, experiments using sensors and real-time synthesis were carried out on a selection of objects.

More information can be found in the publication:

Houix, O., Gutierrez, F., Susini, P., & Misdariis, N. (2013). Participatory Workshops: Everyday Objects and Sound Metaphors. In Proc. of the 10th International Symposium on Computer Music Multidisciplinary Research (p. 41‑53). Marseille, France: PUBLICATIONS OF L.M.A.

SMC conference

Paper accepted at the 9th Sound and Music Computing Conference, 12-14 July 2012, Aalborg University Copenhagen

Françoise, J., Caramiaux, B., and Bevilacqua, F. (2012). A hierarchical approach for the design of gesture-to-sound mapping. In Proceedings of the 9th Sound and Music Computing Conference (SMC’12), pages 233–240, Copenhagen, Denmark.

Abstract: We propose a hierarchical approach for the design of gesture-to-sound mappings, with the goal to take into account multilevel time structures in both gesture and sound processes. This allows for the integration of temporal mapping strategies, complementing mapping systems based on instantaneous relationships between gesture and sound synthesis parameters. As an example, we propose the implementation of Hierarchical Hidden Markov Models to model gesture input, with a flexible structure that can be authored by the user. Moreover, some parameters can be adjusted through a learning phase. We show some examples of gesture segmentations based on this approach, considering several phases such as preparation, attack, sustain, release. Finally we describe an application, developed in Max/MSP, illustrating the use of accelerometer-based sensors to control phase vocoder synthesis techniques based on this approach.

Kick-off meeting

November 8, 2012 at IRCAM (1:30 pm – 4 pm)

Ircam: Frédéric Bevilacqua (coordinator), Hugues Vinet (scientific director), Patrick Susini (Head of PDS team), Nicolas Misdariis, Olivier Houix, Norbert Schnell, Emmanuel Fléty, Joël Bensoam, Isabelle Viaud-Delmon, Jules Françoise

Neuromouv: Sylvain Hanneton, Agnès Roby-Brami, Nicolas Rasamimanana (Phonotonic)

ANR: Olivier Coucharière

Summary

General presentation of the project (slides), F. Bevilacqua

Presentation by Olivier Coucharière (ANR)

Presentation of the scientific aims for the three different types of applications:

The general idea of the LEGOS project is to cross-fertilize interdisciplinary expertise in gesture-controlled sound systems with neuroscience, especially regarding sensori-motor learning. We believe that sensori-motor learning is not sufficiently considered in the development of interactive sound systems. A better understanding of the sensori-motor learning mechanisms involved in gesture-sound coupling should provide us with efficient methodologies for the evaluation and optimization of these interactive systems.

Such advances would significantly expand the usability of today’s gesture-based interactive sound systems, often developed empirically.

- Gesture learning or rehabilitation: The task is to perform a gesture guided by audio feedback. The sensori-motor learning in this case is assessed in terms of gesture precision and repeatability.

- Movement-based sound control: The task is to produce a given sound through the manipulation of a gestural interface, as in digital musical instruments. The sensori-motor learning is assessed in terms of sound production quality.

- Interactive Sound Design: The task is to manipulate an object or a tangible interface that is sonified. The sensori-motor learning in this case is assessed through the quality and understanding of the object manipulation.