The goal of the Legos project was precisely to study the effect of auditory feedback on learning in gestural interactive systems. In sensorimotor learning, auditory feedback has received significantly less attention than visual feedback. The Legos project was conceived as interdisciplinary, building on expertise in sound and music technology and in the neuroscience of motor control. Beyond music, numerous applications such as rehabilitation, sensory substitution, and movement learning in sport could benefit from interactive auditory feedback.

Gestural interaction is now part of many human-computer interfaces, from mobile devices to game interfaces. Moreover, motion sensing can easily be integrated into objects and tools, which enables new uses, in particular with connected objects (the Internet of Things). Learning and mastering new gestures therefore represents a central research topic for the implementation of novel interfaces and tangible objects.

Project Results

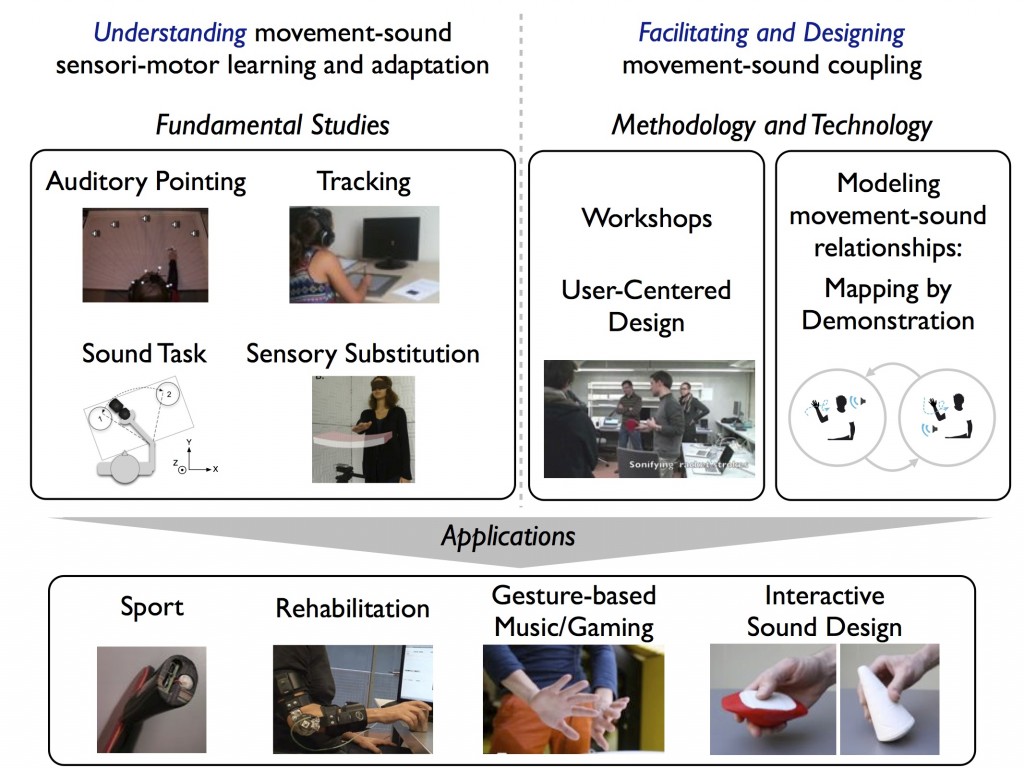

First, we studied auditory feedback experimentally in several contexts: from simple tasks such as pointing or visuomotor tracking, to more complex tasks in which interactive sound feedback can guide movements, and to cases of sensory substitution in which auditory feedback conveys information about object shapes. Second, building on participatory workshops, we developed methodologies for designing sound feedback. Finally, we proposed a method called “mapping by demonstration”, which greatly facilitates the programming and design of sound feedback in interactive systems. This method was demonstrated in the Emerging Technologies program at SIGGRAPH 2014.

The project resulted in 26 publications and communications (5 journal articles, 2 book chapters, and 19 international conference papers or communications), and several more are currently under submission. Two PhD theses, defended in 2015, were associated with the project. Software and prototypes were built and will be used in further research and applications. Our results allow us to pursue several applications in music, interactive sound design, rehabilitation, sensory substitution, and sport.

With this project, we contributed to the development of the research community on movement sonification. We established an important network of collaborations, including ISIR at Université Pierre et Marie Curie, UFR STAPS at Université Paris Descartes, Institut du Cerveau et de la Moelle épinière, École normale supérieure, University College London, and Simon Fraser University. In particular, we will pursue applications in rehabilitation within the framework of the Laboratory of Excellence (Labex) SMART, in collaboration with ISIR, with COMUE Sorbonne Université. At the end of the project, we organised a two-day international workshop with renowned experts in the field.

The Legos project was a fundamental research project coordinated by Ircam, in partnership first with the “Laboratoire de Neurophysique et Physiologie” and subsequently with the “Laboratoire de Psychologie de la Perception” (UMR CNRS – Université Paris Descartes). The project started in October 2011 and lasted 42 months. It was supported by the ANR with €429,806 in funding, for a total cost of €1,046,905. The project was labelled by Cap Digital, the French business cluster for digital content and services.